Exploring Machine Learning & Generative AI on AWS – A Webinar Recap

At REVA Academy for Corporate Excellence (RACE), we don’t just talk about emerging tech—we live it, teach it, and bring it to life for working professionals across the country.

Our recent webinar was a step further in that direction, exploring a topic that’s currently defining the future of technology and business transformation: Machine Learning and Generative AI with Cloud – specifically on AWS.

We were thrilled to welcome a diverse audience—from loyal learners who have been following our series consistently, to enthusiastic newcomers eager to kickstart their AI journey. A heartfelt thank you to each of you for being part of this learning experience.

Before diving into the session, Dr. Shinu Abhi (Director – Corporate Training at RACE) welcomed the participants and gave a quick glimpse of what makes RACE a leader in industry-driven executive education.

The Need of the Hour: AI + Cloud + Speed

Dr. Shinu Abhi captured it best when she said, “Everything today is being powered not just by machine learning, but by advanced agentic frameworks and generative AI.”

From intelligent reasoning to automated decision-making, we’re seeing a massive shift across industries. But while some are already deep into implementation, others are still figuring out where to begin.

That’s where AWS comes in. With pre-trained foundation models and industry-tested pipelines, AWS helps teams deliver results faster, especially in client-facing environments where speed and scalability are critical.

Meet the Expert: Bismillah Kani

Our session was led by Bismillah Kani, a name well-recognized in the tech circuit for his work in AI and computer vision. An AWS evangelist and passionate researcher, Kani is not just a mentor in our M.Tech programs—he’s a driving force behind several real-world industry projects, particularly in aerospace and manufacturing where complex vision systems are needed in challenging environments.

He has a flair for breaking down complex AI workflows into something tangible and actionable, making him the perfect guide for this session.

AWS SageMaker: A Fully Managed ML Service

Kani began by introducing AWS SageMaker, a fully managed service that supports the complete machine learning lifecycle, from data preparation to model deployment. Across its more than 200 services, AWS takes care of the underlying infrastructure so that users can focus on building applications and solving business problems.

He also shared a snapshot of the AWS ML stack:

- Infrastructure Layer: Powered by EC2 instances with CPU and GPU support for ML workloads.

- ML Services Layer: At the core lies SageMaker, surrounded by tools like SageMaker Canvas for no-code users.

- AI-as-a-Service Layer: Pre-built AI services like Amazon Translate, Comprehend, Textract, and Rekognition that users can consume via APIs.

This layered approach enables both technical and non-technical users to experiment with and adopt ML in their workflows.
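To make the AI-as-a-Service layer concrete, here is a minimal Python sketch of consuming one of these APIs, Amazon Comprehend's sentiment detection. It assumes a boto3-style client is passed in as a parameter; `classify_sentiment` and the `FakeComprehend` stub are hypothetical names added so the example runs offline without AWS credentials.

```python
from typing import Any, Protocol


class SentimentClient(Protocol):
    """Anything with a boto3-style detect_sentiment method."""

    def detect_sentiment(self, *, Text: str, LanguageCode: str) -> dict[str, Any]: ...


def classify_sentiment(client: SentimentClient, text: str, language: str = "en") -> str:
    """Call Comprehend's DetectSentiment and return the top-level label."""
    response = client.detect_sentiment(Text=text, LanguageCode=language)
    # Comprehend labels text as POSITIVE, NEGATIVE, NEUTRAL or MIXED.
    return response["Sentiment"]


class FakeComprehend:
    """Hypothetical offline stand-in for boto3.client("comprehend")."""

    def detect_sentiment(self, *, Text: str, LanguageCode: str) -> dict[str, Any]:
        label = "POSITIVE" if "great" in Text.lower() else "NEUTRAL"
        return {"Sentiment": label, "SentimentScore": {}}


if __name__ == "__main__":
    print(classify_sentiment(FakeComprehend(), "The webinar was great!"))
```

With AWS credentials configured, you would pass `boto3.client("comprehend")` instead of the stub. Injecting the client keeps the calling code testable without network access.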

Building ML Models Without Writing Code

Kani kicked off with SageMaker Canvas, a no-code interface designed for those with limited technical experience. Imagine uploading a dataset, clicking through a few buttons, and building a classification model that predicts whether a customer will buy a financial product—all without writing a single line of code. That’s what Canvas delivers.

Canvas supports data from sources like Amazon S3, Snowflake, and Athena, and it works with structured and unstructured data alike. The platform visualizes the machine learning workflow as a Directed Acyclic Graph (DAG), where each step—from data input to transformation and modeling—is laid out clearly. Features like “Get Data Insights” automatically summarize column types, detect skewed distributions, spot anomalies, and flag duplicate rows—within seconds.
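The kind of summary "Get Data Insights" produces can be approximated in a few lines of standard-library Python. This is a hypothetical sketch, not Canvas's implementation: it reports each column's inferred type, missing-value count and population skewness, plus the number of duplicate rows. `data_insights` and `skewness` are illustrative names.

```python
from collections import Counter
from statistics import mean, pstdev


def skewness(values: list[float]) -> float:
    """Population skewness: the mean cubed z-score (0 for symmetric data)."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return 0.0
    return sum(((v - mu) / sigma) ** 3 for v in values) / len(values)


def data_insights(rows: list[dict]) -> dict:
    """Summarise column types, missing values, skew and duplicate rows."""
    columns = sorted({key for row in rows for key in row})
    # Every extra copy of an identical row counts as one duplicate.
    counts = Counter(tuple(row.get(c) for c in columns) for row in rows)
    report = {"duplicate_rows": sum(n - 1 for n in counts.values()), "columns": {}}
    for col in columns:
        present = [row[col] for row in rows if row.get(col) is not None]
        is_numeric = bool(present) and all(
            isinstance(v, (int, float)) and not isinstance(v, bool) for v in present
        )
        info = {
            "type": "numeric" if is_numeric else "categorical",
            "missing": len(rows) - len(present),
        }
        if is_numeric:
            info["skewness"] = round(skewness([float(v) for v in present]), 3)
        report["columns"][col] = info
    return report
```

A large positive or negative skewness value is the sort of signal that would be flagged as a skewed distribution; the cut-off for "skewed" is a judgment call not covered in the session.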

As Kani showed in a live demo, data can be transformed on the fly. Need to remove irrelevant columns? Just click. Missing values? Fix them visually. But what really stood out was the chat-based exploration, powered by large language models. Users can type prompts like “plot a boxplot of job roles vs age,” and Canvas instantly generates visualizations. These insights can even be added as pipeline steps, enabling repeatable workflows.
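Recording transforms as named, ordered steps is what makes a cleaned flow repeatable. The sketch below assumes rows are plain dictionaries; `drop_columns`, `fill_missing` and `run_pipeline` are hypothetical helpers, not Canvas APIs.

```python
from typing import Callable

Row = dict[str, object]
Step = Callable[[list[Row]], list[Row]]


def drop_columns(*names: str) -> Step:
    """Return a step that removes the named columns from every row."""
    def step(rows: list[Row]) -> list[Row]:
        return [{k: v for k, v in row.items() if k not in names} for row in rows]
    return step


def fill_missing(column: str, value: object) -> Step:
    """Return a step that replaces a missing (None) value in one column."""
    def step(rows: list[Row]) -> list[Row]:
        return [
            {**row, column: value if row.get(column) is None else row[column]}
            for row in rows
        ]
    return step


def run_pipeline(rows: list[Row], steps: list[tuple[str, Step]]) -> list[Row]:
    """Apply the named steps in order; the names document each step."""
    for _name, step in steps:
        rows = step(rows)
    return rows
```

Because each step is a plain function, the same `steps` list can be replayed unchanged on a fresh batch of data.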

Once the dataset is cleaned, Canvas identifies the type of ML task, such as binary classification or regression, and offers either AutoML or manual model configuration. Quick Build trains on a sample of the data so you can experiment rapidly, typically in under 15 minutes, while Standard Build goes deeper by training on the full dataset.
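The session did not describe the exact rules Canvas uses to detect the task type, so the following is only a plausible heuristic, sketched under that assumption: two distinct target values suggest binary classification, many distinct numeric values suggest regression, and anything else is treated as multiclass. `infer_problem_type` and its `max_classes` threshold are hypothetical.

```python
def infer_problem_type(target: list, max_classes: int = 10) -> str:
    """Guess the ML task from a target column (hypothetical heuristic)."""
    values = [v for v in target if v is not None]
    distinct = set(values)
    if len(distinct) == 2:
        return "binary_classification"
    # Booleans are ints in Python, so exclude them from the numeric check.
    numeric = all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values)
    if numeric and len(distinct) > max_classes:
        return "regression"
    return "multiclass_classification"
```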

No matter which path you choose, Canvas returns visual metrics like F1 score and feature importance. With just a few clicks, you can run batch predictions, deploy the model as an endpoint, and even manage versions in the model registry.
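For reference, F1 is the harmonic mean of precision and recall, which makes it a sensible headline metric when the positive class (for example, customers who buy the product) is rare. A minimal Python sketch of the computation, where `f1_score` is an illustrative name:

```python
def f1_score(y_true: list[int], y_pred: list[int], positive: int = 1) -> float:
    """F1 = 2 * precision * recall / (precision + recall) for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

For `y_true=[1, 1, 1, 0, 0, 0]` and `y_pred=[1, 1, 0, 1, 0, 0]` there are 2 true positives, 1 false positive and 1 false negative, so precision, recall and F1 all equal 2/3.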

Creating the Machine Learning Model

With the data prepared, the next step was to create the predictive model. Canvas supports various modeling types, including:

- Predictive analysis

- Text analysis

- Fine-tuning foundation models

- Image classification and object detection (when applicable)

After selecting the target column, Canvas automatically identified the task as a binary classification problem. It also allowed flexibility in:

- Choosing performance metrics (default is F1-score, but others can be selected)

- Using AutoML or manual model configuration

- Performing hyperparameter optimization if needed
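Hyperparameter optimization searches over model settings for the combination that scores best on a validation metric. SageMaker's automatic model tuning supports several search strategies; the sketch below uses plain random search for clarity. `random_search` is a hypothetical helper, and the objective passed to it stands in for "train a model and return its validation score".

```python
import random
from typing import Callable


def random_search(
    objective: Callable[[dict], float],
    space: dict[str, tuple[float, float]],
    trials: int = 20,
    seed: int = 0,
) -> tuple[dict, float]:
    """Sample each hyperparameter uniformly from its range; keep the best score."""
    rng = random.Random(seed)
    best_params, best_score = {}, float("-inf")
    for _ in range(trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        score = objective(params)  # higher is better, e.g. validation F1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

Usage with a toy objective that peaks at a learning rate of 0.1: `random_search(lambda p: -(p["learning_rate"] - 0.1) ** 2, {"learning_rate": (0.0, 1.0)}, trials=200)`.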

To save time, Kani demonstrated a model that had already been trained, showing the post-training interface where users can review evaluation metrics, inspect feature importance, and iterate further.

From AutoML to Deployment

Model creation in Canvas was seamless. Users could toggle between predictive, text, or image models. Upon selecting the target variable, Canvas recognized it as a binary classification task and suggested relevant configurations and metrics.

Canvas offers Quick Build for fast iterations and Standard Build for deeper training. Once built, the model’s performance is visualized—accuracy, F1 score, and feature impact. Batch predictions and deployment can be done with a few clicks.
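Once a model is deployed as an endpoint, applications can send it rows to score. A minimal sketch, assuming a boto3 `sagemaker-runtime` client whose `invoke_endpoint` call takes `EndpointName`, `ContentType` and `Body`. The endpoint name, the CSV input and the one-prediction-per-line output are assumptions, since the real response format depends on the deployed model; `predict_rows` and `FakeRuntime` are hypothetical.

```python
import io
from typing import Any


def predict_rows(client: Any, endpoint_name: str, rows: list[list[float]]) -> list[str]:
    """Send CSV rows to a real-time endpoint and return one prediction per row."""
    body = "\n".join(",".join(str(v) for v in row) for row in rows)
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=body.encode("utf-8"),
    )
    # The response Body is a stream; this assumes one prediction per output line.
    return response["Body"].read().decode("utf-8").strip().splitlines()


class FakeRuntime:
    """Hypothetical offline stand-in for boto3.client("sagemaker-runtime")."""

    def invoke_endpoint(self, *, EndpointName: str, ContentType: str, Body: bytes) -> dict:
        lines = Body.decode("utf-8").splitlines()
        preds = ["yes" if float(line.split(",")[0]) > 0.5 else "no" for line in lines]
        return {"Body": io.BytesIO("\n".join(preds).encode("utf-8"))}
```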

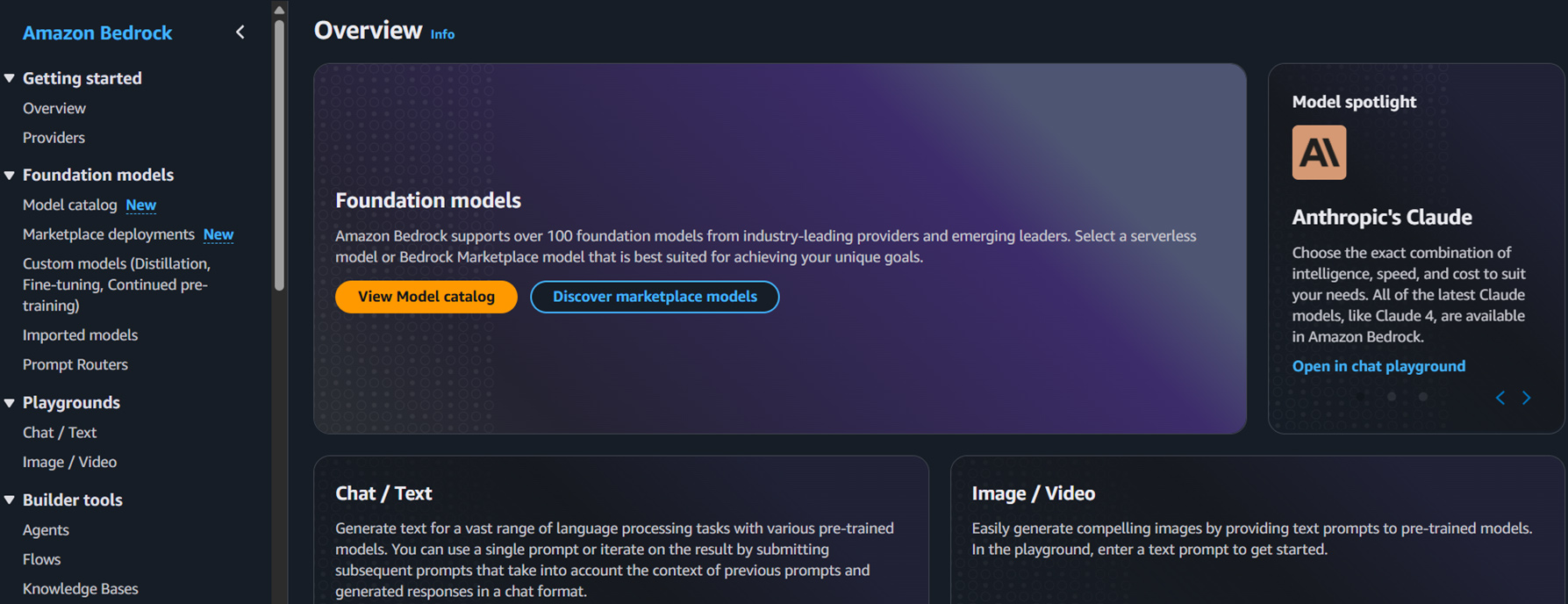

Building Generative AI Applications with Bedrock

Switching over to Amazon Bedrock, Kani introduced it as a fully managed service that gives users access to foundation models from providers such as Meta, Anthropic, and Cohere, with a no-code Playground in the console. In the Playground, users can experiment with different models and compare their behavior by tweaking inference parameters such as temperature and top-k.
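To show what the temperature and top-k knobs do, here is a self-contained sketch of sampling one token from a model's raw scores (logits). It is a simplified illustration, not Bedrock's implementation, and the parameter names each model provider exposes vary; `sample_token` is a hypothetical name.

```python
import math
import random
from typing import Optional


def sample_token(
    logits: dict[str, float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick the next token after top-k filtering and temperature scaling."""
    rng = rng or random.Random()
    ranked = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    # Top-k: keep only the k highest-scoring candidates.
    if top_k is not None:
        ranked = ranked[:top_k]
    # Treat a non-positive temperature as greedy decoding (a common convention).
    if temperature <= 0:
        return ranked[0][0]
    # Temperature below 1 sharpens the distribution; above 1 flattens it.
    scaled = [score / temperature for _, score in ranked]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # stable softmax numerators
    tokens = [token for token, _ in ranked]
    return rng.choices(tokens, weights=weights, k=1)[0]
```

With `top_k=1` the output is always the highest-scoring token regardless of temperature, which is why low top-k values make responses more deterministic.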

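A major feature highlighted was Retrieval-Augmented Generation (RAG). Foundation models only know what was in their training data, so they lack private or recent information. RAG closes this gap by retrieving relevant passages from external sources, such as documents stored in S3 or a vector database, and adding them to the prompt before the model answers.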
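The retrieve-then-augment flow can be sketched with a toy word-count "embedding" and cosine similarity. This is illustrative only: a real system would use an embedding model and a vector store, and `embed`, `cosine` and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts, standing in for a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Retrieve the k most similar chunks and place them before the question."""
    q = embed(question)
    top = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]
    context = "\n".join(f"- {c}" for c in top)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The augmented prompt, not the bare question, is what gets sent to the foundation model.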

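AWS simplifies this with Amazon Bedrock Knowledge Bases: you upload PDFs, choose chunking and embedding settings (for example, Titan Text Embeddings V2), and Bedrock handles the rest, from splitting and embedding the documents to storing the vectors and retrieving relevant chunks at query time.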
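Chunking splits each document into overlapping pieces before embedding so that retrieval can return focused passages. The sketch below approximates tokens with whitespace-separated words, and its sizes are illustrative rather than Bedrock's defaults; `chunk_words` is a hypothetical name.

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into fixed-size word windows that overlap by `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once a window has reached the end of the document.
        if start + max_words >= len(words):
            break
    return chunks
```

The overlap means a sentence that straddles a chunk boundary still appears intact in at least one chunk.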

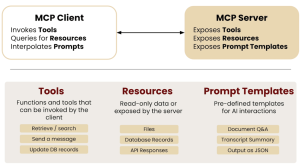

The Role of Model Context Protocol (MCP)

A significant challenge in earlier implementations was the lack of standardization in how LLMs interacted with tools and data. Each integration required separate configurations, making scaling and maintenance cumbersome.

The Model Context Protocol (MCP) addresses this with a standardized client-server architecture, in which:

- The MCP server hosts tools, resources, and prompt templates.

- The clients run inside LLM-powered host applications, such as Claude Desktop or other environments, and connect the model to those servers.

This architecture simplifies interoperability: through one unified interface, LLMs can access tools such as search APIs, databases, and the knowledge bases used for RAG.
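MCP messages use JSON-RPC 2.0, and servers answer methods such as `tools/list` and `tools/call`. The following toy, in-process server is a simplified sketch rather than the official MCP SDK: it omits the initialization handshake and the transports (stdio or HTTP), and the `search_docs` tool is hypothetical.

```python
import json

# Hypothetical tool registry for a toy MCP-style server.
MCP_TOOLS: dict[str, dict] = {
    "search_docs": {
        "description": "Search internal documents by keyword.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
        "handler": lambda args: f"3 documents mention '{args['query']}'",
    }
}


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        tools = [
            {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
            for n, t in MCP_TOOLS.items()
        ]
        result = {"tools": tools}
    elif req["method"] == "tools/call":
        tool = MCP_TOOLS[req["params"]["name"]]
        text = tool["handler"](req["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is JSON-RPC's standard "Method not found" error code.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})
```

Because every server speaks the same methods, a client can discover and call tools without a bespoke integration for each one.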

Introducing Strands Agents – An Open Source Agentic Framework

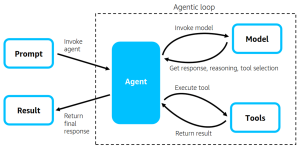

Recently, AWS introduced Strands Agents, an open-source SDK that takes a model-driven approach to building agents. Strands agents use the LLM not only to generate responses but also to reason, select tools, and orchestrate tasks. Here’s how the process works:

- The user prompt is passed to the LLM.

- The LLM reasons out the intent behind the request.

- Based on the context, the model selects the necessary tools.

- The agent invokes these tools and gathers results.

- The model then combines the results to generate the final response.

This agentic workflow simplifies complex, multi-step tasks and shows how LLMs can operate with a high degree of autonomy, since the model, rather than hand-written logic, decides which tools to call.
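The five-step loop described above can be sketched in plain Python, with a rule-based `fake_model` standing in for the LLM's reasoning. This is not the Strands SDK API, which provides its own agent abstractions; `run_agent`, the tools and their outputs are all hypothetical.

```python
from typing import Callable

# Hypothetical tools the agent may call.
AGENT_TOOLS: dict[str, Callable[[str], str]] = {
    "weather": lambda city: f"It is sunny in {city}.",
    # Toy calculator that only handles addition, e.g. "2+3+4".
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}


def fake_model(prompt: str, observations: list[str]) -> dict:
    """Stand-in for the LLM: choose a tool, or answer from results gathered so far."""
    if not observations:
        if "weather" in prompt.lower():
            city = prompt.rsplit(" in ", 1)[-1].strip("?")
            return {"action": "tool", "tool": "weather", "input": city}
        if "+" in prompt:
            return {"action": "tool", "tool": "calculator", "input": prompt.split()[-1]}
    return {"action": "answer", "text": " ".join(observations) or "I can answer that directly."}


def run_agent(prompt: str, max_steps: int = 5) -> str:
    """Model reasons and picks a tool, the agent runs it, the model composes the answer."""
    observations: list[str] = []
    for _ in range(max_steps):
        decision = fake_model(prompt, observations)
        if decision["action"] == "answer":
            return decision["text"]
        observations.append(AGENT_TOOLS[decision["tool"]](decision["input"]))
    return "Stopped: step limit reached."
```

For example, `run_agent("What is the weather in Bengaluru?")` routes to the weather tool and returns its result. The `max_steps` cap is a simple guard against a model that never decides to answer.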

Conclusion: A Future Built Without Code

AWS is removing barriers to entry for machine learning and generative AI. With SageMaker Canvas and Bedrock, you no longer need a technical degree to explore, build, and deploy smart applications.

According to Statista, the global AI software market is expected to reach $126 billion by 2025.

“No-code AI isn’t just for beginners. It’s the next wave of enterprise innovation.”

— Forrester Research

For professionals looking to upskill in AI, RACE (REVA Academy for Corporate Excellence) offers advanced programs designed for real-world applications. Whether you’re a tech enthusiast or a business leader, our AI courses provide hands-on learning, expert mentorship, and industry-recognized certifications.

Our programs include the M.Tech./M.Sc. in Artificial Intelligence (AICTE/UGC Approved, Two Years Weekend Program), PG Diploma in Artificial Intelligence (AICTE/UGC Approved, One Year Weekend Program), Certified AI Engineer (100 Hours), Certified Gen AI Specialist (100 Hours), and Certified AIOps Specialist with Azure and AWS Response Tester (100 Hours).